Alright folks – we’re going to dive into how you can leverage Azure Cognitive Services with PowerShell to not only set up AI services but also to interact with them. Let’s go!

Prerequisites

Before we begin, ensure you have the following:

- An Azure subscription.

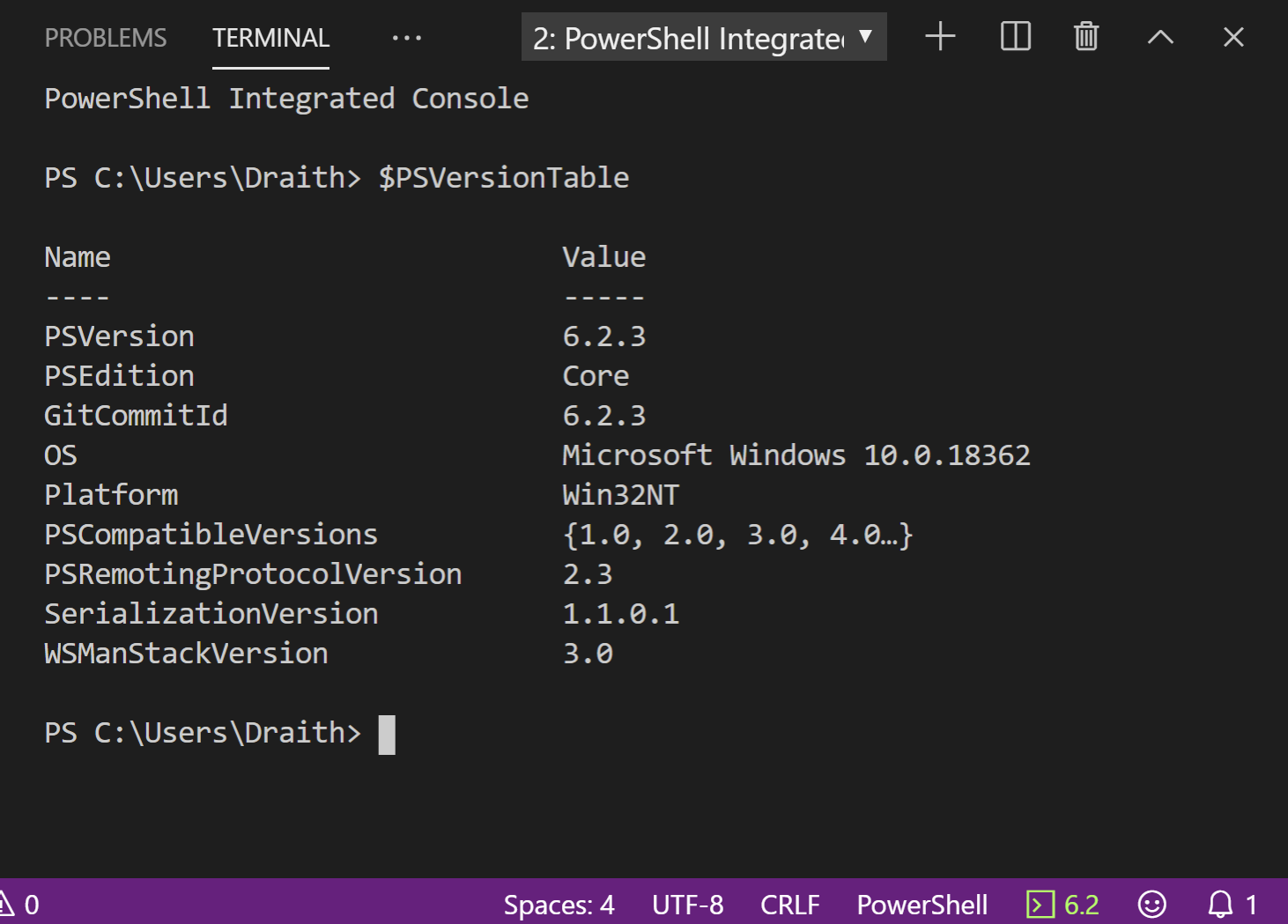

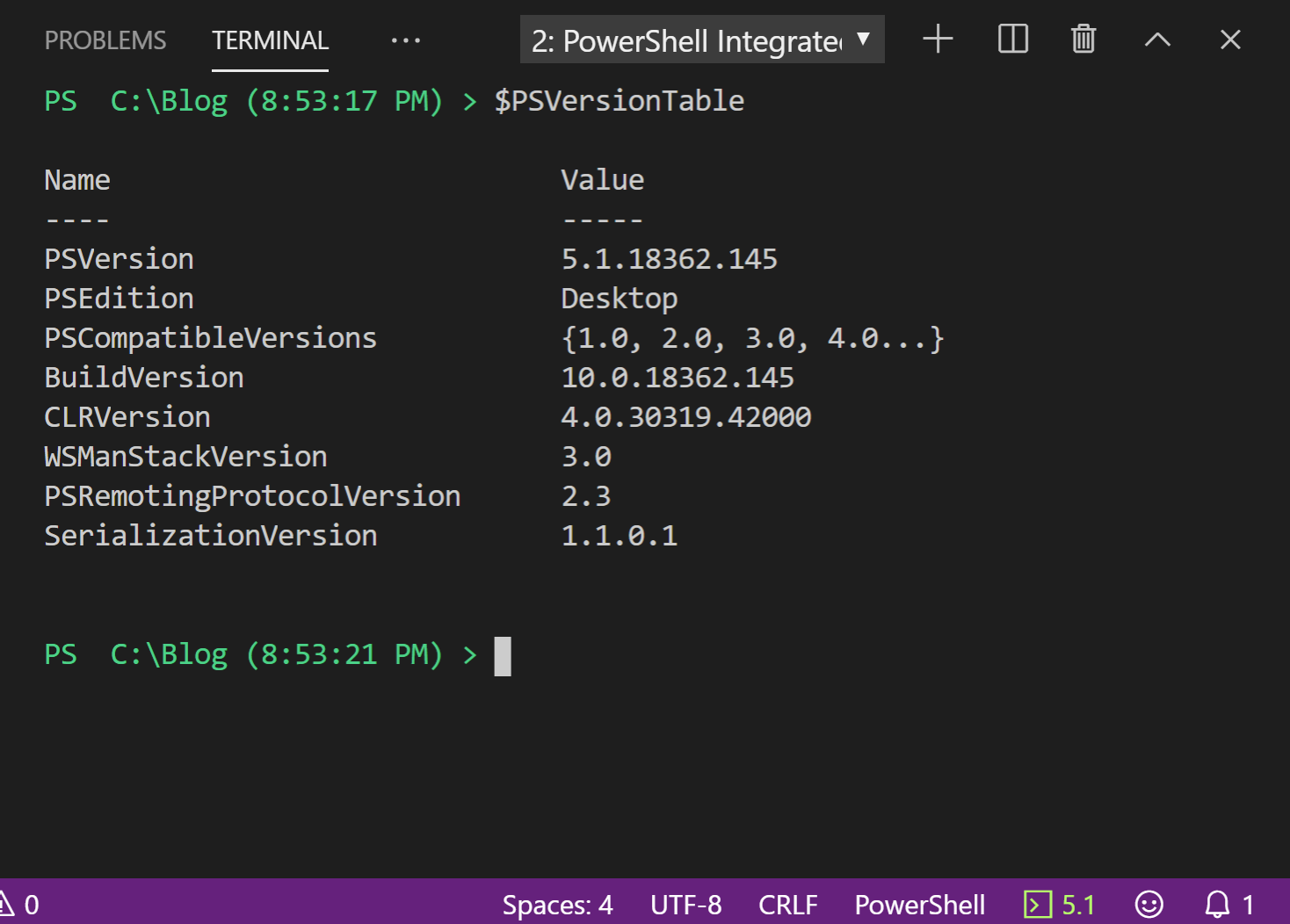

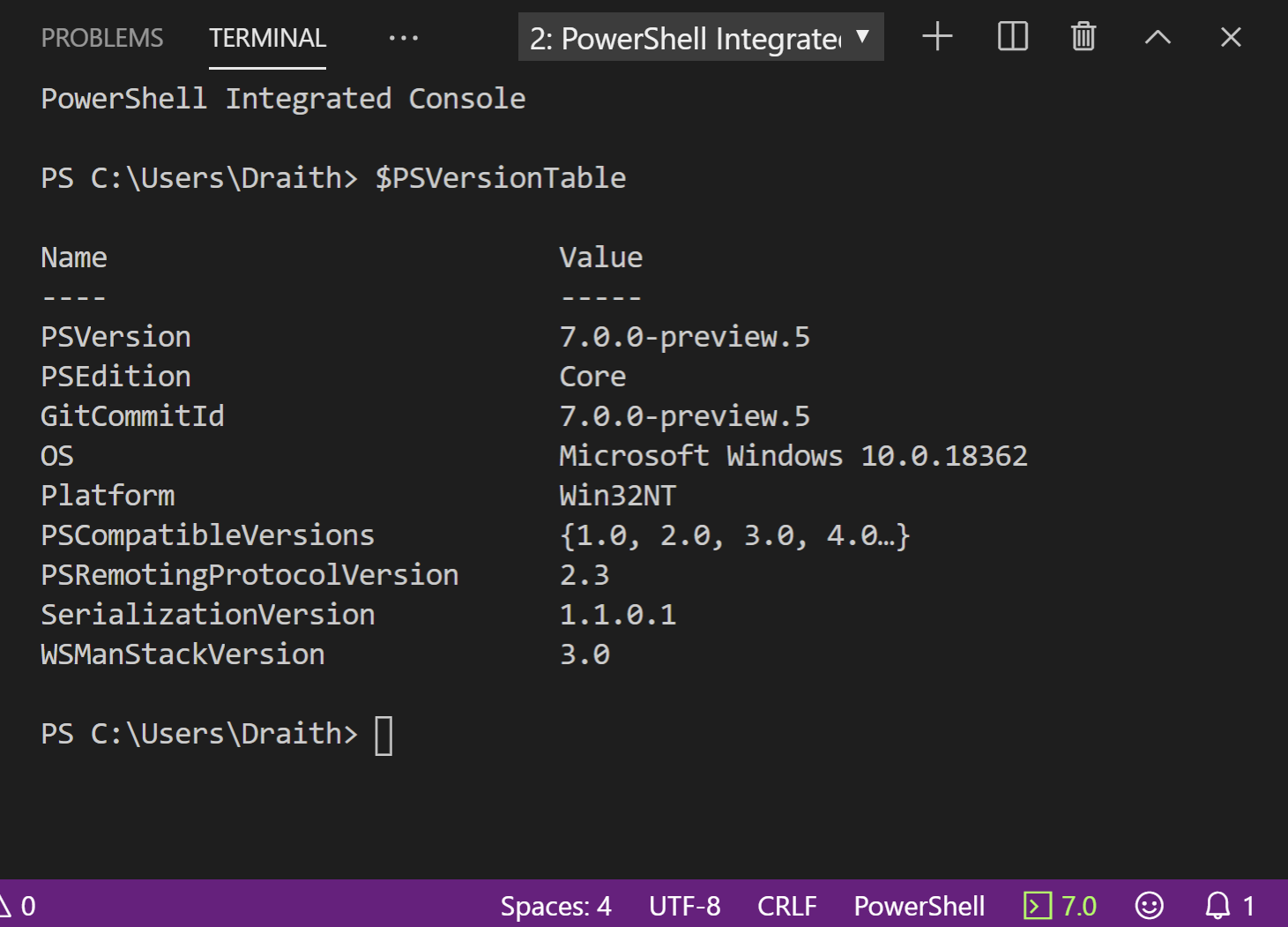

- PowerShell 7.x or higher installed on your system.

- The Azure PowerShell (Az) module. Install it by running this in your PowerShell session:

Install-Module -Name Az -AllowClobber

Use Connect-AzAccount to sign in to your subscription, then run this to create a new resource group and Cognitive Services resource:

$resourceGroupName = "<YourResourceGroupName>"
$location = "EastUS"
$cognitiveServicesName = "<YourCognitiveServicesName>"

# Create a resource group if you haven't already
New-AzResourceGroup -Name $resourceGroupName -Location $location

# Create Cognitive Services account
New-AzCognitiveServicesAccount -Name $cognitiveServicesName -ResourceGroupName $resourceGroupName -Type "CognitiveServices" -Location $location -SkuName "S0"

It’s that simple!
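If you expect to run the setup more than once, it can help to create things only when they are missing. Here's a rough sketch of that idea, not the only way to do it. The function name Initialize-CognitiveServicesAccount is made up for illustration. It also passes -CustomSubdomainName, reusing the account name as the subdomain. That's an assumption on my part: as far as I know, the per-resource URLs of the form https://<YourCognitiveServicesName>.cognitiveservices.azure.com only resolve when the account has a custom subdomain, and the subdomain has to be globally unique.

```powershell
# Sketch: create the resource group and account only when they are missing.
# Assumes the Az module is loaded and Connect-AzAccount has already been run.
# Initialize-CognitiveServicesAccount is a hypothetical helper name.
function Initialize-CognitiveServicesAccount {
    param(
        [Parameter(Mandatory)] [string] $ResourceGroupName,
        [Parameter(Mandatory)] [string] $Name,
        [string] $Location = "EastUS"
    )

    # Treat any lookup error as "does not exist yet".
    try {
        $group = Get-AzResourceGroup -Name $ResourceGroupName -ErrorAction Stop
    } catch {
        $group = $null
    }
    if (-not $group) {
        New-AzResourceGroup -Name $ResourceGroupName -Location $Location | Out-Null
    }

    try {
        $account = Get-AzCognitiveServicesAccount -ResourceGroupName $ResourceGroupName -Name $Name -ErrorAction Stop
    } catch {
        $account = $null
    }
    if (-not $account) {
        # Assumption: the account name doubles as the custom subdomain.
        $account = New-AzCognitiveServicesAccount -Name $Name -ResourceGroupName $ResourceGroupName -Type "CognitiveServices" -Location $Location -SkuName "S0" -CustomSubdomainName $Name
    }
    return $account
}
```

You would call it as Initialize-CognitiveServicesAccount -ResourceGroupName $resourceGroupName -Name $cognitiveServicesName. Swallowing every lookup error is a simplification, so a permissions problem would also look like "not found" here.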

To interact with Cognitive Services, you’ll need the access keys. Retrieve them with:

$key = (Get-AzCognitiveServicesAccountKey -ResourceGroupName $resourceGroupName -Name $cognitiveServicesName).Key1
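Along with the key, you need the base URL of your resource. Rather than typing it by hand, you can probably read it from the account object, which exposes an Endpoint property. Here's a small sketch that joins that endpoint with an API path. Join-ServiceUri is a hypothetical helper name, not something that ships with the Az module.

```powershell
# Hypothetical helper: join a service endpoint and an API path with exactly one slash.
function Join-ServiceUri {
    param(
        [Parameter(Mandatory)] [string] $Endpoint,
        [Parameter(Mandatory)] [string] $Path
    )
    return $Endpoint.TrimEnd('/') + '/' + $Path.TrimStart('/')
}

# Usage, assuming $resourceGroupName and $cognitiveServicesName are set and you are signed in:
# $account = Get-AzCognitiveServicesAccount -ResourceGroupName $resourceGroupName -Name $cognitiveServicesName
# $uri = Join-ServiceUri -Endpoint $account.Endpoint -Path "text/analytics/v3.1/languages"
```

Trimming both sides means it doesn't matter whether the endpoint comes back with a trailing slash.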

With your Cognitive Services resource set up and your access keys in hand, you can now interact with various cognitive services. Let’s explore a couple of examples:

Text Analytics

To analyze text for sentiment, language, or key phrases, you’ll use the Text Analytics API. Here’s a basic example to detect the language of a given text:

$text = "Hello, world!"
$uri = "https://<YourCognitiveServicesName>.cognitiveservices.azure.com/text/analytics/v3.1/languages"

# Build the request body: a list of documents, each with an id and the text to analyze
$body = @{
    documents = @(
        @{
            id   = "1"
            text = $text
        }
    )
} | ConvertTo-Json -Depth 5

$response = Invoke-RestMethod -Uri $uri -Method Post -Body $body -Headers @{
    "Ocp-Apim-Subscription-Key" = $key
    "Content-Type"              = "application/json"
}

# The v3.1 response returns one detectedLanguage object per document
$response.documents.detectedLanguage | Format-Table -Property name, confidenceScore

This code tries to determine the language of the submitted text. The output might look like this:

Name    ConfidenceScore
----    ---------------
English            0.99
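The same request shape should work for the other Text Analytics operations mentioned earlier, such as sentiment, and the documents list can hold more than one entry. Here's a rough sketch of a helper that builds the body from an array of strings. New-TextAnalyticsBody is a name I made up, and the default language of "en" is an assumption you'd adjust for your own text.

```powershell
# Hypothetical helper: turn an array of strings into a Text Analytics request body.
function New-TextAnalyticsBody {
    param(
        [Parameter(Mandatory)] [string[]] $Text,
        [string] $Language = "en"
    )
    $id = 0
    $documents = foreach ($item in $Text) {
        $id++
        @{ id = "$id"; language = $Language; text = $item }
    }
    # @(...) keeps "documents" a JSON array even when only one string is passed.
    return @{ documents = @($documents) } | ConvertTo-Json -Depth 5
}

# Usage, assuming $key holds an access key for your resource:
# $uri  = "https://<YourCognitiveServicesName>.cognitiveservices.azure.com/text/analytics/v3.1/sentiment"
# $body = New-TextAnalyticsBody -Text "I love this!", "This is terrible."
# $response = Invoke-RestMethod -Uri $uri -Method Post -Body $body -ContentType "application/json" -Headers @{ "Ocp-Apim-Subscription-Key" = $key }
# $response.documents | Select-Object id, sentiment
```

Each document gets a sequential id, so you can match results back to the input order.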

Let’s try computer vision now:

Computer Vision

Azure’s Computer Vision service can analyze images and extract information about visual content. Here’s how you can use PowerShell to send an image to the Computer Vision API for analysis:

$imageUrl = "<YourImageUrl>"
$uri = "https://<YourCognitiveServicesName>.cognitiveservices.azure.com/vision/v3.1/analyze?visualFeatures=Description"

$body = @{
    url = $imageUrl
} | ConvertTo-Json

$response = Invoke-RestMethod -Uri $uri -Method Post -Body $body -Headers @{
    "Ocp-Apim-Subscription-Key" = $key
    "Content-Type"              = "application/json"
}

$response.description.captions | Format-Table -Property text, confidence

This code asks the service to describe the image, so the output might look like this:

Text                                                     Confidence
----                                                     ----------
A scenic view of a mountain range under a clear blue sky       0.98
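If you want more than a caption, the visualFeatures query parameter accepts a comma-separated list, for example Description,Tags. Here's a sketch that builds the whole request as a hashtable you can splat onto Invoke-RestMethod, with a try/catch around the call. New-VisionAnalyzeRequest is a hypothetical helper name, and the endpoint shown in the usage comment assumes your resource has a custom subdomain.

```powershell
# Hypothetical helper: build the parameters for an Analyze Image call as a hashtable
# that can be splatted onto Invoke-RestMethod.
function New-VisionAnalyzeRequest {
    param(
        [Parameter(Mandatory)] [string] $Endpoint,
        [Parameter(Mandatory)] [string] $Key,
        [Parameter(Mandatory)] [string] $ImageUrl,
        [string[]] $VisualFeatures = @("Description")
    )
    return @{
        Uri         = $Endpoint.TrimEnd('/') + "/vision/v3.1/analyze?visualFeatures=" + ($VisualFeatures -join ",")
        Method      = "Post"
        ContentType = "application/json"
        Headers     = @{ "Ocp-Apim-Subscription-Key" = $Key }
        Body        = (@{ url = $ImageUrl } | ConvertTo-Json)
    }
}

# Usage, assuming $key and $imageUrl are set as in the example above:
# $request = New-VisionAnalyzeRequest -Endpoint "https://<YourCognitiveServicesName>.cognitiveservices.azure.com" -Key $key -ImageUrl $imageUrl -VisualFeatures "Description", "Tags"
# try {
#     $response = Invoke-RestMethod @request
#     $response.tags | Format-Table -Property name, confidence
# } catch {
#     Write-Warning "Request failed: $($_.Exception.Message)"
# }
```

Keeping the request in one hashtable makes it easy to inspect before sending, and the catch block gives you a readable message if the key or URL is wrong.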

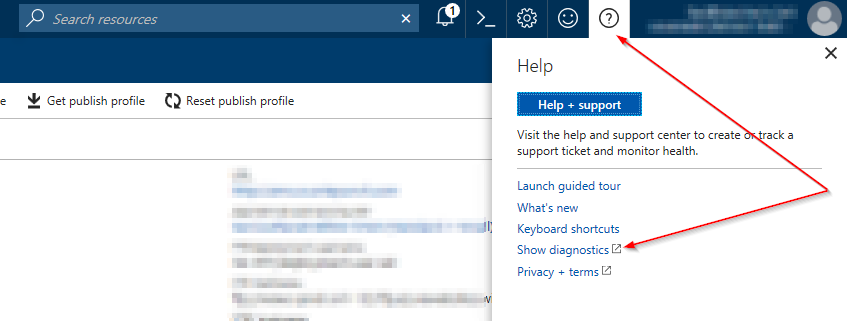

To learn more about Cognitive Services – check out the Docs!