You’ve got 40GB of log files, a broken app, and a CFO reminding you how much money the company is losing per minute. You’ve got to find that one error in one log that will clue you in on how to fix the issue. You have no idea where it is, but you know you have this issue in the bag. How? Because you have PowerShell. I’m going to show you how.

We’ve all been there: a task that has to be done across hundreds of systems, a search of thousands of files, pulling a property from tens of thousands of AD user accounts. PowerShell can do it, but there is always a need to shave those seconds off. In this article we examine how to perform these large actions in the quickest possible way.

Asynchronous Processing

PowerShell has a couple of options when it comes to running tasks in a ‘multi-threaded’ fashion. The two you will primarily hear about are workflows and runspaces (jobs are another topic). Workflows are dead easy to set up, but can be picky about what they will and will not allow to happen in them. Workflows do have the nice feature of sequencing: you can tell part of the workflow to run in sequence, then run other parts in parallel. Runspaces are more difficult to set up initially, but allow essentially any action you desire. Runspaces also allow for insane parallel processing. Personally, I always keep runspaces in my toolbox. It becomes a no-brainer once you factor in the work that PowerShell heavyweights, mainly Boe Prox and Warren F., have done to make runspaces super easy. These guys are serious rock stars.
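To give a feel for what "more difficult to set up" means, here is a minimal sketch of a raw runspace pool, the kind of plumbing that helper functions hide from you. It is only an illustration under simple assumptions (three numbers to square, a pool capped at three runspaces), not the code those helpers actually use.

```powershell
# Minimal runspace pool sketch: square three numbers in parallel.
$pool = [runspacefactory]::CreateRunspacePool(1, 3)   # min 1, max 3 concurrent runspaces
$pool.Open()

$jobs = foreach ($n in 1..3) {
    $ps = [powershell]::Create()
    $ps.RunspacePool = $pool
    # AddScript/AddArgument queue the work; BeginInvoke starts it without blocking
    [void]$ps.AddScript({ param($x) $x * $x }).AddArgument($n)
    [pscustomobject]@{ Shell = $ps; Handle = $ps.BeginInvoke() }
}

# EndInvoke waits for each instance to finish and returns its output
$squares = foreach ($job in $jobs) {
    $job.Shell.EndInvoke($job.Handle)
    $job.Shell.Dispose()
}

$pool.Close()
$pool.Dispose()
$squares
```

Every piece of work needs its own PowerShell instance, its own handle, and its own cleanup, which is exactly the bookkeeping you would rather not write by hand each time.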

Invoke-Parallel – Your new best friend

When you absolutely, positively have to burn up those CPUs and flood the network, you need Invoke-Parallel. Seriously, download it now. I made that a link for a reason. Go get it. Using this beast, we can run multiple commands against 20,000 remote server nodes every evening. We can put thousands of SCOM nodes into maintenance mode in a matter of minutes, or search hundreds of directories with thousands of files in a matter of seconds. This is one function that will elevate your PowerShell game. It’s all built on runspaces, and has some amazing logic wrapped around it.

From GitHub you will get a .ps1 file. You can either pull the function out of that file and include it in your script, dot-source the whole .ps1 file (. "C:\temp\invoke-parallel.ps1"), or take the function and wrap it up in a module. The module route is my preferred method, since I wrap it up with other useful functions. Regardless of how you reference the function, calling it is easy. Here is a simple example:

. "c:\blog\Parallel\invoke-parallel.ps1"

$servers = 'server1','server2','server3'

Invoke-Parallel -InputObject $servers -ScriptBlock {Test-Connection $_ -Count 1 -Quiet}

This is pretty straightforward. I am dot-sourcing the ps1, generating an array that has 3 servers in it, and then sending that array to the Invoke-Parallel function as the InputObject parameter. This couldn’t be easier, and guess what? You just ‘multi-threaded’ a PowerShell script. Pat yourself on the back, and then buy Boe and Warren a drink the next time you see them.

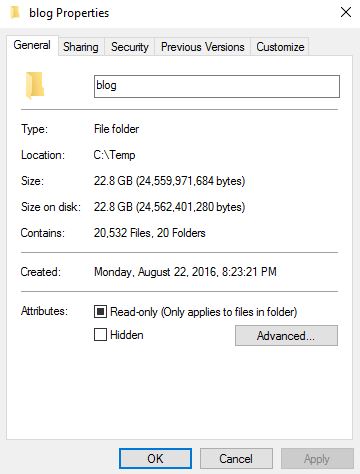

Now invoking these runspaces doesn’t come free: there is overhead and startup time associated with starting a runspace, and that can actually hurt your overall run time. For example, if you have 20,000 log files that are relatively small (10MB or less), and you need to run a “Select-String -Pattern 'something'” against them, then it might not be advantageous to use Invoke-Parallel. Let’s look at the time it takes to find an error in one of those log files with each method. In a previous blog post, I created a function to create a lot of log files with random data. I am using that here to create 20,000 log files of about 1MB in size. (Side note: I will later be expanding that function to take advantage of Invoke-Parallel.)

I have edited a random file and added this line somewhere in the middle:

2016-08-21–ERROR–TOO MANY FILES, IDIOT.

I have no idea which one I edited. That’s how dedicated I am to this cause. Now, let’s measure how long it takes to find this string both with and without Invoke-Parallel.

Without:

PS C:\blog\Parallel> Measure-Command{Get-ChildItem c:\Temp\blog -recurse | Select-String -pattern "ERROR"}

Days : 0

Hours : 0

Minutes : 2

Seconds : 11

Milliseconds : 966

Ticks : 1319662757

TotalDays : 0.00152738745023148

TotalHours : 0.0366572988055556

TotalMinutes : 2.19943792833333

TotalSeconds : 131.9662757

TotalMilliseconds : 131966.2757

With:

Measure-Command{

. "c:\blog\Parallel\invoke-parallel.ps1"

$files = Get-ChildItem c:\Temp\blog -Recurse

Invoke-Parallel -InputObject $files -Throttle 8 -ScriptBlock { $_ | Select-String -Pattern 'ERROR' }

}

Days : 0

Hours : 0

Minutes : 4

Seconds : 1

Milliseconds : 880

Ticks : 2418807160

TotalDays : 0.00279954532407407

TotalHours : 0.0671890877777778

TotalMinutes : 4.03134526666667

TotalSeconds : 241.880716

TotalMilliseconds : 241880.716

Because the files are small, Select-String can process them faster than we can spin up new runspaces. But if we change the size of the files, say to something like 1GB each, the difference is dramatic.
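If you want to see that startup cost in isolation, a rough sketch like the following compares trivial work done inline against the same work handed to one PowerShell instance per item. The item count (200) and pool size (8) are arbitrary assumptions, and it uses a raw runspace pool rather than Invoke-Parallel itself, so treat the numbers as a feel for the overhead rather than a benchmark of the function.

```powershell
$items = 1..200

# Baseline: trivial work done inline
$inline = Measure-Command {
    foreach ($i in $items) { $null = $i * 2 }
}

# Same work, but every item pays for its own PowerShell instance in a pool
$pooled = Measure-Command {
    $pool = [runspacefactory]::CreateRunspacePool(1, 8)
    $pool.Open()
    $jobs = foreach ($i in $items) {
        $ps = [powershell]::Create()
        $ps.RunspacePool = $pool
        [void]$ps.AddScript({ param($x) $x * 2 }).AddArgument($i)
        [pscustomobject]@{ Shell = $ps; Handle = $ps.BeginInvoke() }
    }
    foreach ($job in $jobs) {
        $null = $job.Shell.EndInvoke($job.Handle)
        $job.Shell.Dispose()
    }
    $pool.Close()
    $pool.Dispose()
}

'Inline: {0:N1} ms   Pooled: {1:N1} ms' -f $inline.TotalMilliseconds, $pooled.TotalMilliseconds
```

The exact timings will vary by machine, but when each unit of work is this cheap the setup cost has nothing to hide behind.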

Without Invoke-Parallel:

PS C:\blog\Parallel> Measure-Command{Get-ChildItem c:\Temp\blog -recurse | Select-String -pattern "ERROR"}

Days : 0

Hours : 0

Minutes : 5

Seconds : 12

Milliseconds : 368

Ticks : 3123680771

TotalDays : 0.00361537126273148

TotalHours : 0.0867689103055556

TotalMinutes : 5.20613461833333

TotalSeconds : 312.3680771

TotalMilliseconds : 312368.0771

And with:

Days : 0

Hours : 0

Minutes : 2

Seconds : 15

Milliseconds : 444

Ticks : 1354442030

TotalDays : 0.00156764123842593

TotalHours : 0.0376233897222222

TotalMinutes : 2.25740338333333

TotalSeconds : 135.444203

TotalMilliseconds : 135444.203

Invoke-Parallel more than halved the time it took to process those files, from about 5.2 minutes down to about 2.3. Why? Because we could load up multiple Select-String calls at a time, as each was long-running. Each individual Select-String is not CPU intensive; it just takes time. In this instance I could process 40GB of files in about 2.25 minutes, whereas the small-file run through Invoke-Parallel needed 4 minutes for only 20GB. This tells us that runspaces are great for commands or scripts that take a bit longer to run and aren’t horribly CPU intensive.
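To put the four timings above side by side, here is a small worked calculation. The seconds are copied from the Measure-Command output; the sizes are the approximate totals quoted in the text (about 20GB of small files, about 40GB of large ones), so the throughput figures are rough.

```powershell
# Timings copied from the Measure-Command output above; sizes are the
# approximate totals quoted in the text, not measured values.
$runs = @(
    [pscustomobject]@{ Files = 'small'; Method = 'Pipeline';        SizeGB = 20; Seconds = 131.9662757 }
    [pscustomobject]@{ Files = 'small'; Method = 'Invoke-Parallel'; SizeGB = 20; Seconds = 241.880716 }
    [pscustomobject]@{ Files = 'large'; Method = 'Pipeline';        SizeGB = 40; Seconds = 312.3680771 }
    [pscustomobject]@{ Files = 'large'; Method = 'Invoke-Parallel'; SizeGB = 40; Seconds = 135.444203 }
)

# Approximate throughput for each run
$runs | Select-Object Files, Method,
    @{ Name = 'MBPerSec'; Expression = { [math]::Round($_.SizeGB * 1024 / $_.Seconds, 1) } }

# Pipeline time divided by Invoke-Parallel time (above 1 means parallel was faster)
$smallRatio = [math]::Round($runs[0].Seconds / $runs[1].Seconds, 2)
$largeRatio = [math]::Round($runs[2].Seconds / $runs[3].Seconds, 2)
"Small files: $smallRatio   Large files: $largeRatio"
```

A ratio below 1 for the small files and above 2 for the large ones is the whole story in two numbers: the same tool that slows down cheap work speeds up long-running work.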

All of this brings me to the title of this article. When you are dealing with an absolutely massive number of machines, AD accounts, or large files, whatever the case may be, Invoke-Parallel should be in your toolbox. At my current job, I have 6 commands to run every night on around 18,000 servers. I run these through 8 jump servers: I pipe Invoke-Command calls through a large Invoke-Parallel with a throttle of 80, and can finish this job in about 3 hours. Prior to using Invoke-Parallel, it was taking about 18 hours to complete. That is how you utilize a network.

The main parameters that we typically deal with when using Invoke-Parallel are the InputObject, the Throttle, the ScriptBlock (or ScriptFile), and the timeout (the parameter is named RunspaceTimeout in the published function). The InputObject is an array that is the basis for the function. In essence the function will open a runspace for each object in the array. It could be an array of servers, an array of users, or a list of files. The Throttle is how many runspaces you want running at the same time. Avoid the temptation to set this value too high, as it can actually be detrimental if too many runspaces are vying for the same resources (CPU/MEM/Disk). A good rule of thumb for my environment is to limit it to the number of processors on the system running the task. If I am using multiple servers to run tasks, or if the tasks have extremely minimal requirements, I might set it higher. RunspaceTimeout is how many seconds you want a runspace to run before it is killed. This is typically used to free up runspaces that have encountered a problem, such as hung commands. The last parameter, the ScriptBlock (or ScriptFile), is what you want to actually happen in the runspace. Take this example:

$sites = 'www.google.com','www.cnn.com','www.microsoft.com','www.reddit.com'

. "C:\blog\Parallel\invoke-parallel.ps1"

invoke-parallel -InputObject $sites -scriptblock {Test-Connection $_ -quiet}

In this case, the ScriptBlock is a simple Test-Connection. The $_ is the reference to the current object being processed by this runspace. In this example it was a single host name, but it can also be an object with properties, which you would access as any other property ($_.Name, $_.Size, etc.). Inside the scriptblock, there are two options for accessing variables that are declared outside of the scriptblock. You can either use the ‘$using:variable’ method, or you can specify the ‘-ImportVariables’ parameter for Invoke-Parallel. Along those same lines, if you want to use modules that are imported outside of the runspace, you can use the ‘-ImportModules’ parameter.
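As a rough sketch of the first option, the snippet below passes objects with properties as input and reads an outer variable through $using:. The object list, the SizeMB property, and the $minimumMB variable are all hypothetical names made up for illustration, and the guard simply avoids an error if the function has not been loaded yet.

```powershell
# Hypothetical objects standing in for real input such as file or server objects
$files = @(
    [pscustomobject]@{ Name = 'app.log';   SizeMB = 900 }
    [pscustomobject]@{ Name = 'audit.log'; SizeMB = 5 }
    [pscustomobject]@{ Name = 'web.log';   SizeMB = 1200 }
)
$minimumMB = 100   # declared outside the script block

if (Get-Command Invoke-Parallel -ErrorAction SilentlyContinue) {
    Invoke-Parallel -InputObject $files -ScriptBlock {
        # $_ is one object from $files; $using: pulls in the outer variable
        if ($_.SizeMB -ge $using:minimumMB) { $_.Name }
    }
}
else {
    Write-Warning 'Invoke-Parallel is not loaded; dot-source invoke-parallel.ps1 first.'
}
```

The $using: form keeps the dependency visible at the point of use, which can be easier to follow than importing every variable in the session when only one or two are needed.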

This example expands on the script block a bit, and shows how to use the -ImportVariables parameter:

$sites = 'www.google.com','www.cnn.com','www.microsoft.com','www.reddit.com', 'www.powershell.org','www.draith.com','www.bing.com','www.arstechnica.com','www.bbcnews.com'

$texttofind = 'PowerShell'

. "C:\blog\Parallel\invoke-parallel.ps1"

invoke-parallel -InputObject $sites -ImportModules -ImportVariables -scriptblock {

if (Test-Connection $_ -quiet -count 1)

{

try

{

$result = Invoke-WebRequest -Uri $_ -UseBasicParsing|select-string -Pattern $texttofind

if ($result)

{

Write-output $_

}

}

catch

{

Write-Verbose 'Error getting site data.'

}

}

}
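The throttle and timeout settings described earlier can be combined in the same way. This is a hedged sketch rather than a recommendation: the server names are placeholders, the 30-second timeout is an arbitrary choice, and -RunspaceTimeout is the parameter name as I understand it from the published function, so check Get-Help Invoke-Parallel against your copy.

```powershell
# Rule of thumb from above: one runspace per logical processor on this machine
$throttle = [Environment]::ProcessorCount
$servers  = 'server1', 'server2', 'server3'   # placeholder names

if (Get-Command Invoke-Parallel -ErrorAction SilentlyContinue) {
    # Assumed parameter name: -RunspaceTimeout (seconds before a runspace is given up on)
    Invoke-Parallel -InputObject $servers -Throttle $throttle -RunspaceTimeout 30 -ScriptBlock {
        Test-Connection $_ -Count 1 -Quiet
    }
}
else {
    Write-Warning 'Invoke-Parallel is not loaded; dot-source invoke-parallel.ps1 first.'
}
```

Deriving the throttle from the processor count keeps the script sensible when it moves between a laptop and a beefier jump server.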

These are the basics of Invoke-Parallel. If you have any questions, feel free to leave a comment or ping me via email. In a future post we will go over jobs and how they compare to runspaces. See you then!

Again – special thanks to Boe Prox and Warren F. You guys make this stuff look easy.