There are a ton of articles that detail how to get all sorts of data into Log Analytics. My friend Cameron Fuller has demonstrated several ways to do it, for example. Heck, I even wrote a post or two.

Recently it occurred to me that I hadn’t read many articles on WHY you would want your data in Log Analytics. For people who already have data being ingested, it’s obvious, but if you haven’t started down that road yet, you might be wondering what all the hype is about. This article is for you.

I will tell you right now – Log Analytics is the ‘gateway drug’ of Azure. One hit, and you are hooked. Once you get your data into Log Analytics, the possible uses skyrocket. Let’s break down some of the obvious ones first.

Analysis

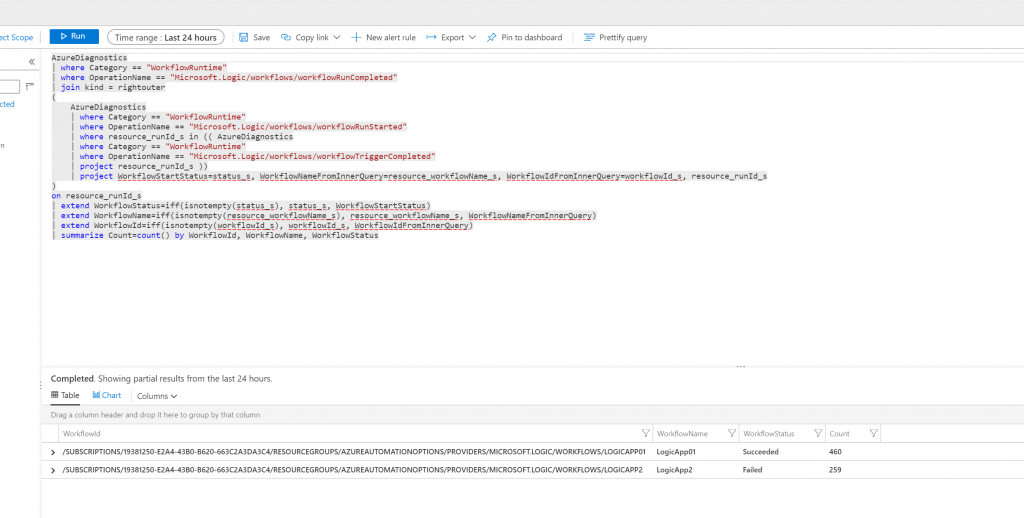

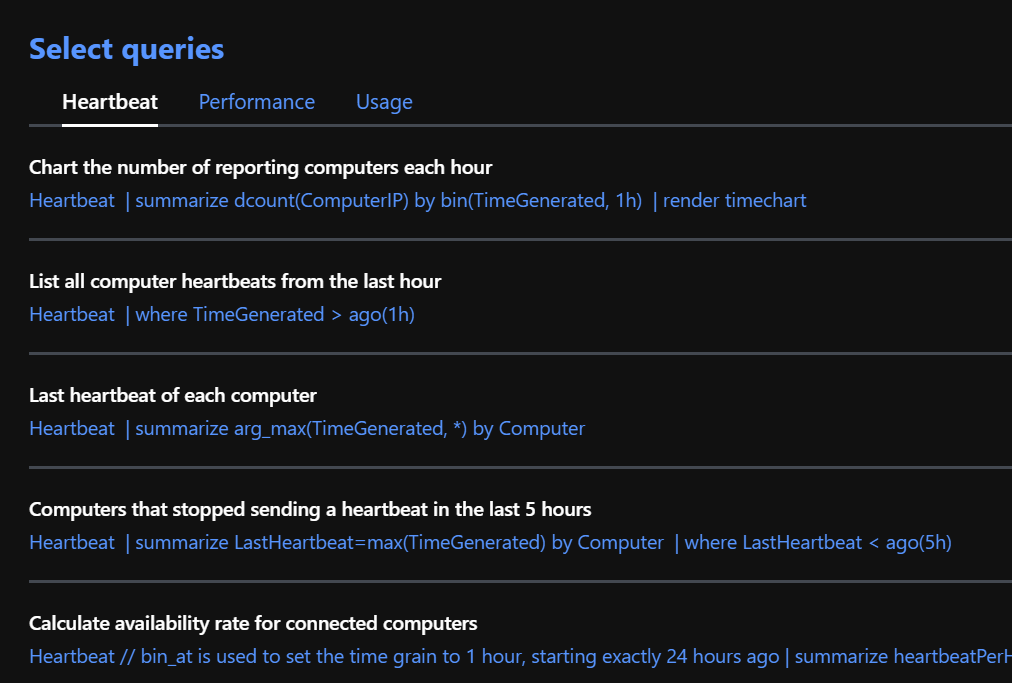

This one gets the “Duh” award. Getting your data into Log Analytics immediately lets you use the Azure Data Explorer query language (known at one time as Kusto).

The language is easy to understand and easy to write, and there are tons of examples for everything from simple queries to complex monsters with charts, predictions, and cross-resource log searches. This is the first and most obvious benefit of data ingestion. Not to diminish what you get by stopping here, but if this is the full extent of how you use your data, you are missing out.
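The same queries are not locked inside the portal. As a minimal sketch, assuming the `azure-monitor-query` and `azure-identity` Python packages are installed and you have read access to a workspace, the code below runs a classic "which machines have stopped reporting?" query against the `Heartbeat` table. The helper names `build_heartbeat_query` and `run_query` are hypothetical, not part of any SDK, and the query is only one illustrative example.

```python
from datetime import timedelta


def build_heartbeat_query(stale_after_minutes: int) -> str:
    """Hypothetical helper: build a KQL query listing computers whose last
    heartbeat is older than the given number of minutes."""
    if stale_after_minutes <= 0:
        raise ValueError("stale_after_minutes must be positive")
    return (
        "Heartbeat\n"
        "| summarize LastSeen = max(TimeGenerated) by Computer\n"
        f"| where LastSeen < ago({stale_after_minutes}m)\n"
        "| order by LastSeen asc"
    )


def run_query(workspace_id: str, query: str, days: int = 7) -> list:
    """Hypothetical helper: run a KQL query against a Log Analytics workspace
    and return each result row as a dict keyed by column name.

    The Azure SDK imports live inside the function so the query builder above
    can be used (and tested) without the SDK installed.
    """
    from azure.identity import DefaultAzureCredential
    from azure.monitor.query import LogsQueryClient, LogsQueryStatus

    client = LogsQueryClient(DefaultAzureCredential())
    # timespan bounds how far back the service looks; machines silent for
    # longer than `days` will not appear in the results at all.
    response = client.query_workspace(workspace_id, query, timespan=timedelta(days=days))
    if response.status != LogsQueryStatus.SUCCESS:
        # Partial results: surface the problem rather than return incomplete data.
        raise RuntimeError(f"Query did not fully succeed: {response.status}")
    rows = []
    for table in response.tables:
        for row in table.rows:
            rows.append(dict(zip(table.columns, row)))
    return rows
```

With a real workspace ID, calling `run_query(workspace_id, build_heartbeat_query(60))` would return one dict per silent machine, with `Computer` and `LastSeen` keys, ready to feed into whatever comes next.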

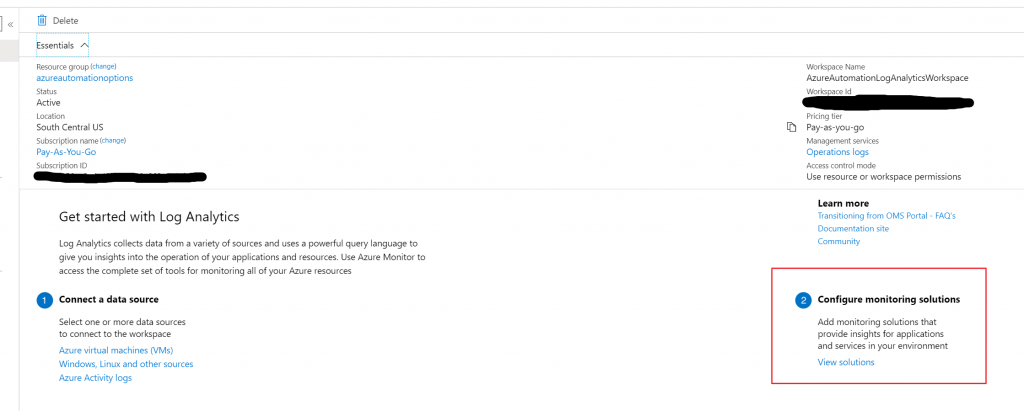

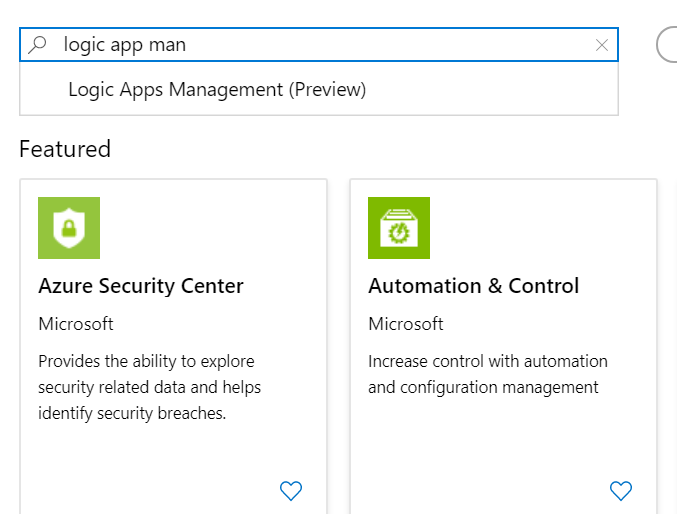

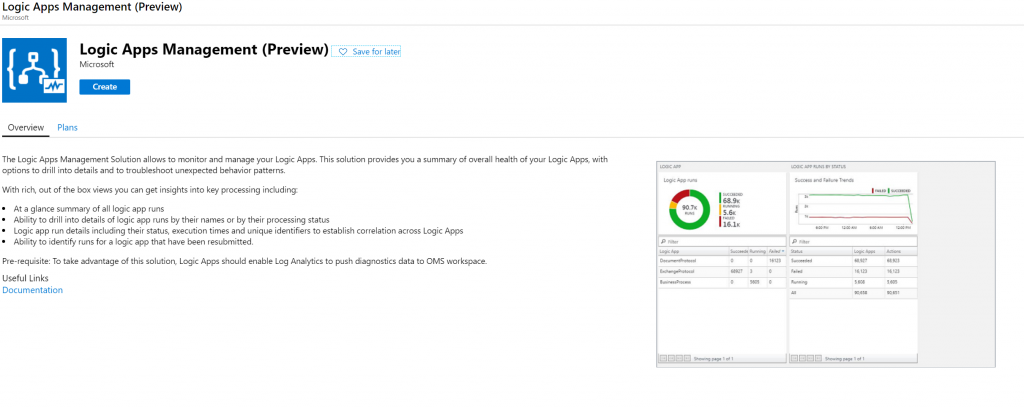

Solutions

Built right into Log Analytics is a set of amazing pre-built solutions that automatically take your logs and turn them into consumable and actionable data points. Need to know how your Operations Manager environment is doing? Connect SCOM to Log Analytics and you are just a few clicks away from performance improvement suggestions, availability recommendations, and even spotting upgrade issues before they occur. The SQL Assessment supplies even more actionable data across your entire connected SQL environment. Most of the solutions come with exquisitely detailed recommendations. Check out this example from my personal SQL Assessment.

There are many different solutions, and they are being added all the time. Container analysis, upgrade assessment, change tracking, malware checking, AD replication status – the list of solutions is amazing! Even better, the product team that builds these solutions wants to know what you want to see! Go here to see the full list of solutions currently available, and check out the link at the bottom of the page to leave your suggestions.

The not-so-obvious, and the most fun!

Ok – we’ve knocked out the most obvious usages, but now let’s look at some of the other fun uses!

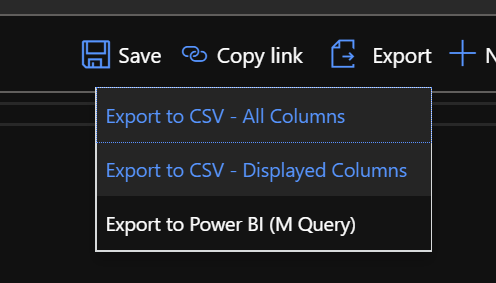

Log Analytics queries can be exported directly from Log Analytics and imported into PowerBI! Simply craft your query, click export (see below), and LA will automatically generate the connection information and the query in a way that PowerBI can understand. All of that data is suddenly available to one of the best BI engines in the business.

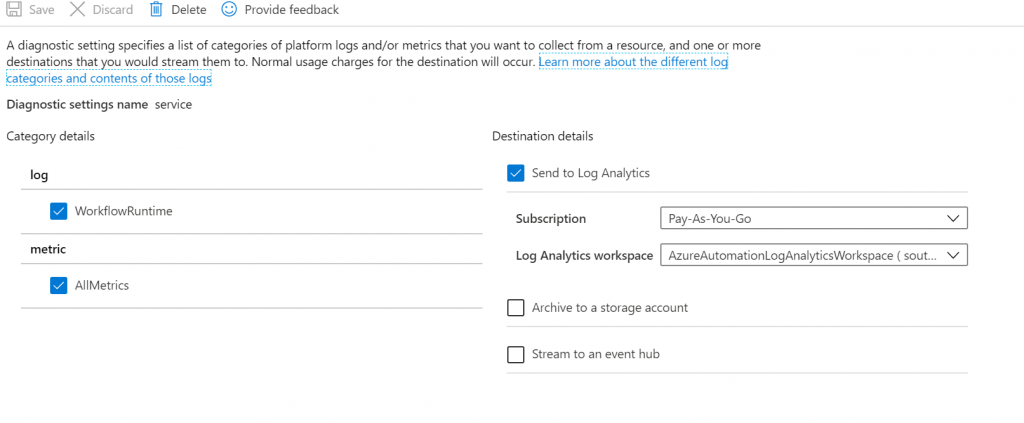

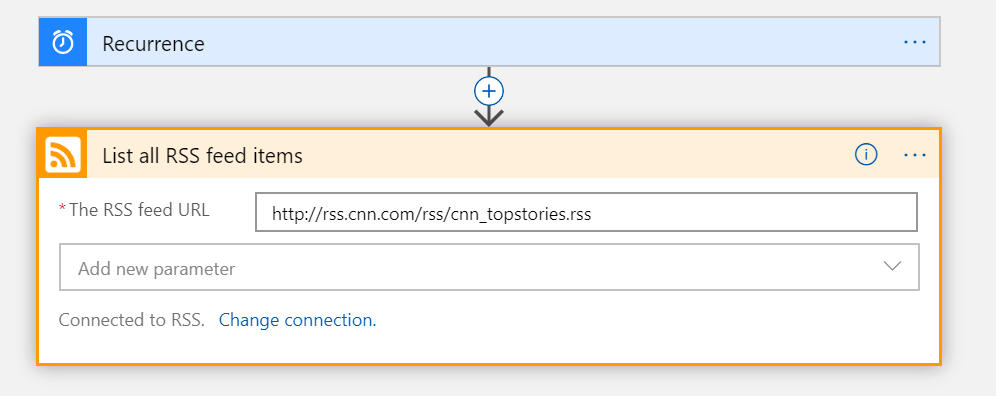

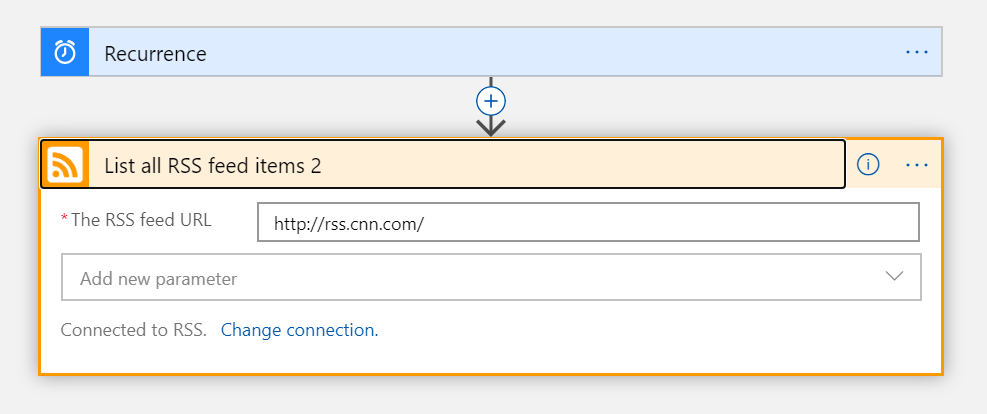

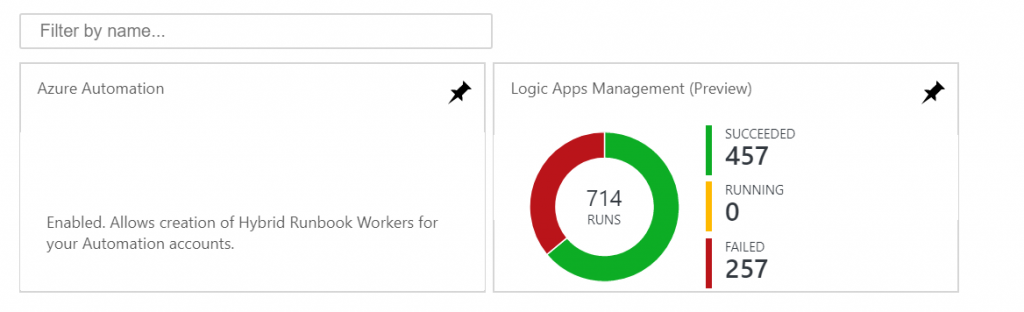

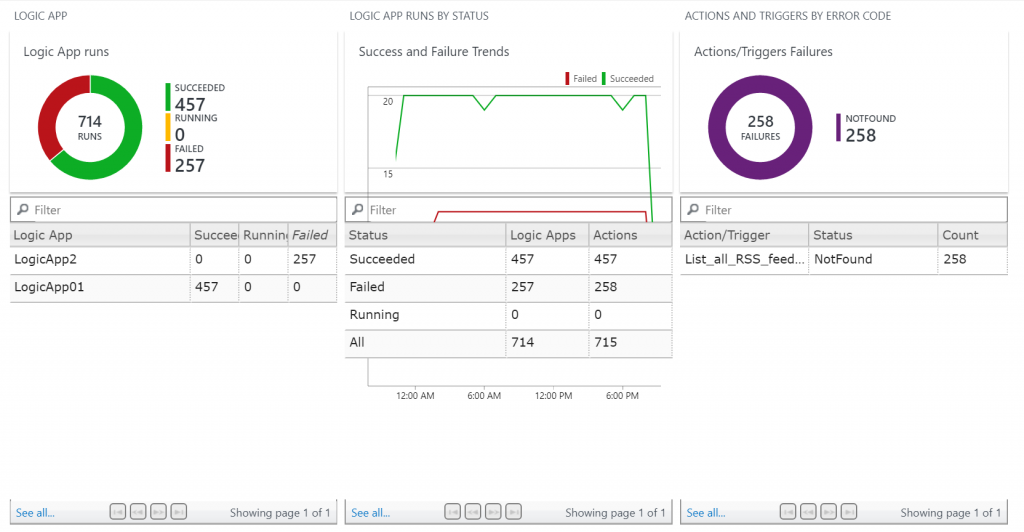

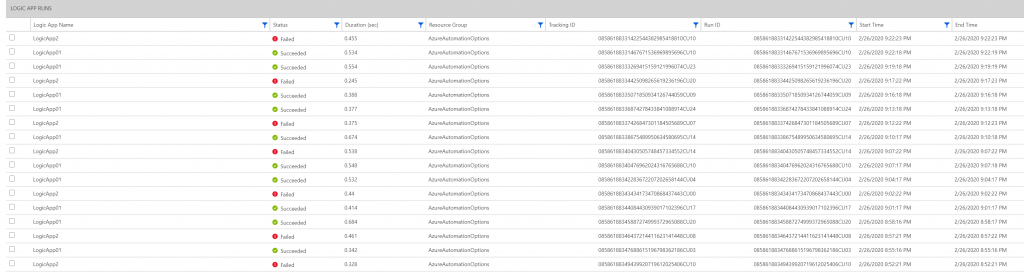

Ok – I can hear you all now. “PowerBI is just another reporting application” (it’s not, btw), but what else can we do? How about integration with one of the most powerful sets of automation tools on the market? Both Flow and Logic Apps have connectors that let you query your data and trigger actions from the returned results. This is where your data integration truly starts!

Imagine some of the possibilities for both your on-prem and cloud resources:

- Get texts about critical updates as they are found

- Schedule update installations with a full approval chain

- Send notifications about changes that occur in your environment, sending the notifications to the appropriate teams based on the change type

- Azure Monitor alerts sent straight to Event Grid or Event Hubs for further processing

- Connect your SCOM alerts through LA and right into Azure Automation to run runbooks in the cloud or on-prem

- Consume logs from your building entry system and schedule software distributions when people leave the office
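Most of these ideas boil down to "query, then decide who hears about it." As a rough sketch of the change-notification idea, here is the routing step in Python. It assumes the rows come from the Change Tracking solution's `ConfigurationChange` table and are keyed on its `ConfigChangeType` column, whether they arrive through Flow, a Logic App, or a scripted query. The `TEAM_BY_CHANGE_TYPE` mapping, the team addresses, and both functions are hypothetical placeholders.

```python
from collections import defaultdict

# Hypothetical mapping from ConfigChangeType values to the team that should be told.
TEAM_BY_CHANGE_TYPE = {
    "Software": "desktop-team@example.com",
    "WindowsServices": "server-team@example.com",
    "Daemons": "linux-team@example.com",
    "Files": "security-team@example.com",
    "Registry": "security-team@example.com",
}
# Hypothetical catch-all for change types not listed above.
DEFAULT_TEAM = "ops-team@example.com"


def route_changes(rows):
    """Group change records by the team that should be notified.

    Each row is a dict with at least 'Computer' and 'ConfigChangeType' keys.
    Unknown or missing change types fall back to DEFAULT_TEAM.
    """
    batches = defaultdict(list)
    for row in rows:
        team = TEAM_BY_CHANGE_TYPE.get(row.get("ConfigChangeType"), DEFAULT_TEAM)
        batches[team].append(row)
    return dict(batches)


def format_message(team, changes):
    """Build a plain-text summary of one team's changes."""
    lines = [f"{len(changes)} change(s) for {team}:"]
    for change in changes:
        lines.append(f"- {change['Computer']}: {change['ConfigChangeType']}")
    return "\n".join(lines)
```

In a real workflow, each `(team, message)` pair would be handed to a send action such as an email or Teams connector; this sketch only decides who gets what.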